Resources

- Slides

- Exercise Slides

- add recording when available – didn’t attend in person

The Scientific Method

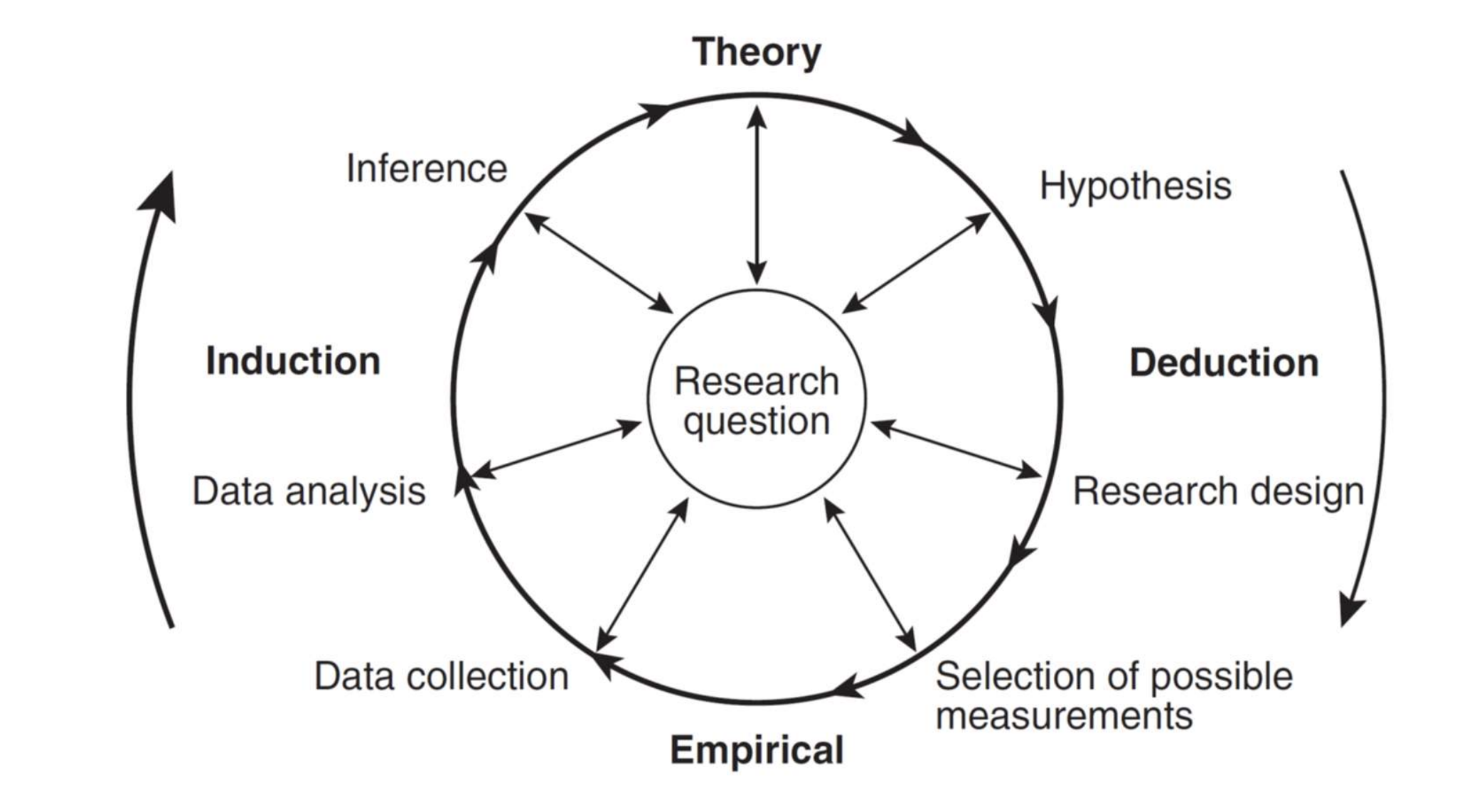

The hypothetico-deductive model is a common framework for structuring scientific inquiry. (p. 6)

Hypothetico-Deductive Model

Defining a Hypothesis

A hypothesis is an informed speculation (ideally derived from a theory) about the possible relationship between two or more variables. It is often formulated as an “If…, then…” statement. (p. 8)

Hypothesis Criteria (p. 15)

- Falsifiability: It must be possible to refute the hypothesis through empirical evidence.

- Empirical Content: Refers to the number of ways a hypothesis can be falsified. A higher empirical content means the hypothesis is more testable and, if it survives testing, more informative. This is influenced by:

  - Level of Universality: How general is the “if” component? A more general “if” part increases empirical content.

  - Degree of Precision: How specific is the “then” component? A more specific “then” part increases empirical content. Example: “Yearly feedback improves employee satisfaction” has higher precision than “Yearly feedback affects employee satisfaction.” (p. 16)

Types of Variables

Understanding the different roles variables can play is crucial for designing research and interpreting results. (p. 14)

Independent Variable (IV)

The “if” component of a hypothesis. This is the variable that is manipulated or whose effect one wants to understand; it is also called the predictor.

Dependent Variable (DV)

The “then” component of a hypothesis. This is the variable that depends on (i.e., is predicted by) the independent variable.

Confounder Variable

An extraneous variable that has a causal relationship to both the independent and the dependent variable and that is responsible for the correlation between them.

Example: Ice cream sales (IV) and drowning accidents (DV) are correlated, but neither causes the other. The confounder is high temperature, which increases both. (p. 11)
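To make the confounder idea concrete, here is a minimal simulation sketch. All numbers are invented for illustration (they are not from the slides): temperature drives both variables, and the two variables never influence each other. The raw correlation is high, but the partial correlation that controls for temperature is close to zero.

```python
import random

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def partial_corr(x, y, z):
    """Correlation of x and y after removing the linear influence of z."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / ((1 - r_xz ** 2) * (1 - r_yz ** 2)) ** 0.5

rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical data: temperature causes both outcomes; they do not affect each other.
temperature = [rng.uniform(10, 35) for _ in range(2000)]
ice_cream = [2.0 * t + rng.gauss(0, 5) for t in temperature]
drownings = [0.5 * t + rng.gauss(0, 2) for t in temperature]

raw = pearson(ice_cream, drownings)
controlled = partial_corr(ice_cream, drownings, temperature)
print(f"raw correlation: {raw:.2f}")
print(f"controlling for temperature: {controlled:.2f}")
```

In correlational data the confounder has to be measured to be controlled this way; unmeasured confounders remain a threat.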

Mediator Variable

An intervening variable that reflects the mechanism leading to the correlation between the independent and the dependent variable. It explains how or why the relationship exists.

Example: Yearly feedback (IV) increases perceived appreciation (Mediator), which in turn increases employee satisfaction (DV). (p. 12)

Moderator Variable

A variable that affects the direction or strength of the correlation between the independent and the dependent variable. It explains when or for whom the relationship holds.

Example: The effect of feedback meetings (IV) on employee satisfaction (DV) might be stronger for less experienced employees (Moderator) than for more experienced ones. (p. 13)
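A moderator can be illustrated by estimating the same effect separately within each level of the moderator. The sketch below uses simulated, hypothetical satisfaction scores in which the effect of feedback meetings is assumed to be larger for less experienced employees. The effect sizes 1.5 and 0.5 are made up for the illustration.

```python
import random
from statistics import mean

rng = random.Random(1)  # fixed seed so the sketch is reproducible

def estimated_effect(n, true_effect):
    """Simulate n employees per condition and return the mean difference
    in satisfaction (with feedback minus without feedback)."""
    without = [5.0 + rng.gauss(0, 1) for _ in range(n)]
    with_feedback = [5.0 + true_effect + rng.gauss(0, 1) for _ in range(n)]
    return mean(with_feedback) - mean(without)

# Hypothetical assumption: the IV has a stronger effect for less experienced staff.
effect_junior = estimated_effect(1000, true_effect=1.5)
effect_senior = estimated_effect(1000, true_effect=0.5)

print(f"effect for less experienced employees: {effect_junior:.2f}")
print(f"effect for more experienced employees: {effect_senior:.2f}")
```

The IV-DV relationship exists in both subgroups; experience only changes its strength, which is what distinguishes a moderator from a mediator.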

Testing a Hypothesis

Operationalization

Operationalization is the process of translating abstract theoretical constructs into measurable, empirical variables. This moves a hypothesis from the conceptual level (“If A, then B”) to the empirical level (“If measurable X, then measurable Y”). (p. 18)

Examples: The theoretical construct “personality” can be operationalized using the TIPI questionnaire. “Effective leadership” can be operationalized by measuring employee satisfaction. (p. 19)

Reliability

Reliability refers to the consistency and stability of a measurement. (p. 21)

- Test-Retest Reliability: Assesses stability over time by correlating scores from the same test administered on two different occasions.

- Internal Reliability: Assesses the consistency of results across items within the same test. It is commonly measured using Cronbach’s Alpha (α), which in its standardized form depends on the number of items and their average inter-item correlation: $\alpha = \frac{k\,\bar{r}}{1 + (k - 1)\,\bar{r}}$, where $k$ is the item count and $\bar{r}$ is the average inter-item correlation. A value of α ≥ 0.8 is generally considered good.
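Both reliability types reduce to correlations, so they can be sketched in a few lines. The example assumes the standardized form of Cronbach's Alpha, α = k·r̄ / (1 + (k − 1)·r̄), with k the item count and r̄ the average inter-item correlation. All scores are hypothetical.

```python
from itertools import combinations

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def alpha_from_r(k, r_bar):
    """Standardized Cronbach's alpha from item count and mean inter-item correlation."""
    return k * r_bar / (1 + (k - 1) * r_bar)

def standardized_alpha(items):
    """items: one list of scores per item, respondents in the same order."""
    pairs = list(combinations(items, 2))
    r_bar = sum(pearson(a, b) for a, b in pairs) / len(pairs)
    return alpha_from_r(len(items), r_bar)

# Test-retest reliability: same test, same people, two occasions (hypothetical scores).
time1 = [10, 12, 15, 18, 20]
time2 = [11, 12, 16, 17, 21]
test_retest = pearson(time1, time2)

# Internal reliability: three items answered by five respondents (hypothetical scores).
items = [
    [1, 2, 3, 4, 5],
    [2, 2, 3, 5, 5],
    [1, 3, 3, 4, 4],
]
alpha = standardized_alpha(items)

print(f"test-retest r = {test_retest:.2f}")
print(f"Cronbach's alpha = {alpha:.2f}")
```

Under this formula, adding items raises α even if the average correlation stays the same: `alpha_from_r(4, 0.5)` gives 0.8, while two items with the same r̄ give about 0.67.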

Validity of a Measure

Validity refers to how well a measure captures what it is intended to measure. (p. 22)

- Construct Validity: How well the measure captures the underlying theoretical construct. It is assessed through:

  - Convergent Validity: High correlation with other measures of similar constructs.

  - Divergent Validity: Low correlation with measures of unrelated constructs.

Validity of a Research Design

This refers to how well a study measures what it sets out to measure, or how well it reflects the reality it claims to represent. (p. 23)

- Internal Validity: The degree to which a study can firmly establish a causal link between the IV and DV, free from the influence of confounders.

- External Validity: The degree to which the findings can be generalized to other people, settings, and situations outside of the specific research context.

- An increase in one type of validity often comes at the cost of the other.

Example: A study on virtual team creativity has high internal validity if differences in creativity can be unambiguously attributed to the virtual vs. in-person setting. It has high external validity if the results from the specific task used can be applied to real-world creative problems. (p. 24)

Research Approaches (p. 25)

- Correlational Research: Variables are observed as they naturally occur without manipulation. This method can identify relationships but cannot confirm causality.

- Experimental Research: The independent variable is actively manipulated by the researcher, and participants are randomly assigned to different conditions (e.g., experimental vs. control group) to observe the effect on the dependent variable. This allows for causal inferences.

- Quasi-Experimental Approach: The independent variable is manipulated, but the assignment of participants to conditions is not random. This approach is often used when random assignment is not feasible, but it results in lower internal validity compared to a true experiment. (p. 27)
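Random assignment is what separates a true experiment from a quasi-experiment. A minimal sketch of how it might be done follows. The function name, participant IDs, and condition labels are hypothetical.

```python
import random

def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them out to conditions in turn,
    so group sizes differ by at most one."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy, so the caller's list is untouched
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for i, person in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(person)
    return groups

participants = [f"P{i:02d}" for i in range(1, 21)]  # hypothetical IDs P01..P20
groups = randomly_assign(participants, ["experimental", "control"], seed=42)

for condition, members in groups.items():
    print(condition, len(members), members)
```

Because chance alone decides who ends up in which group, potential confounders are expected to be balanced across conditions on average. In a quasi-experiment, group membership is given (e.g., existing teams), so this step is not available.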

Independent Variable Manipulation (p. 26)

- Between-Subjects Design: Different groups of participants are assigned to different levels of the independent variable.

- Within-Subjects Design: The same group of participants is exposed to all levels of the independent variable (also known as repeated measures).
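The difference between the two designs shows up in the data layout. The sketch below uses hypothetical participants and the virtual vs. in-person setting as example levels. The alternating assignment only keeps the example short; an actual experiment would assign levels randomly.

```python
levels = ["virtual", "in_person"]        # hypothetical levels of the IV
participants = ["P1", "P2", "P3", "P4"]  # hypothetical participant IDs

def between_subjects(participants, levels):
    """Each participant contributes data at exactly one level."""
    return [(p, levels[i % len(levels)]) for i, p in enumerate(participants)]

def within_subjects(participants, levels):
    """Every participant contributes data at every level (repeated measures)."""
    return [(p, level) for p in participants for level in levels]

between = between_subjects(participants, levels)
within = within_subjects(participants, levels)

print(len(between), "observations in the between-subjects layout")
print(len(within), "observations in the within-subjects layout")
```

With the same number of participants, the within-subjects layout yields one observation per participant per level, and each participant serves as their own comparison.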